This time I really had to split my look back at EclipseCon Europe into multiple posts. In my previous post I wrote down my recollections of ECE 2017 before it actually began: my arrival and first meetings with the community, the Unconference on Monday, and the reception and networking at the Nestor bar.

On Tuesday it was finally time to open the doors for EclipseCon Europe! It already begins at the reception desk and cloakroom, where you meet and greet lots of people. Of course there is not much time then, but I was looking forward to finding more time for chatting later.

Preparing for showtime

Tuesday morning is the tutorials slot. I would have liked to join Mickael Istria’s tutorial on the Language Server Protocol and Eclipse’s Generic Editor. This is definitely a topic where I will bring in my experience and contribute. Instead, I chose to prepare for my first talks that day.

All itemis colleagues met at our booth and we set it up together. The booth would play a central role for us later, and we wanted to make it an interesting place for attendees too. And hey – we had stickers again this year! I ordered them recently and improved my GIMP skills to make them free-form cuts. The quality is good, and now we know where to order good ones.

At noon it was time to meet up again. I am not sure with whom I went that day. I think it was Tuesday when I joined Lorenzo Bettini and Francesco and Vincenzo from RCP Vision. Or was it already Monday? With all the information overload I find it hard to get everything into the right order.

Keynotes

After lunch it was time for the opening keynote from Mike Milinkovich. But before that, Ludwigsburg’s mayor gave an insight into Ludwigsburg’s history. You may have read that the Eclipse Foundation and Ludwigsburg agreed to keep EclipseCon Europe there for at least two more years. Good choice. The location is just perfect for this conference.

Then it was time for Mike to welcome the audience and give an outlook on the future of Eclipse. These are exciting times, with many projects joining the Eclipse Foundation. With the move of Java EE to EE4J at Eclipse, no fewer than 40 new projects are pushing in, and GlassFish alone has 130 Git repositories. It will be a challenging task to get this project off the ground. I don’t want to get into the details now, but OpenJ9 and Deeplearning4J are other awesome projects at the Foundation now. Eclipse Science is also getting things rolling and is successful with its own release train. The Eclipse IDE now supports Java 9 and JUnit 5; this support has been added to Eclipse Oxygen.1a and Photon. The Eclipse Public License 2.0 was released this year, and JUnit 5 was the first project licensed under EPL-2.0.

I have to admit that I was distracted during Anna Ståhlbröst’s keynote that followed. I followed parts of it and enjoyed how she presented life in Luleå, near the polar circle in northern Sweden. The reason for my distraction was that my next talk was due immediately after the keynote.

itemis Booth Talks

Now it was finally time for an idea that we had for our booth: to use our technical and presentation skills and give short talks there. Lothar Wendehals used the short break between the keynotes and the afternoon talks to show in just 5 minutes how to program an embedded system with our YAKINDU Statechart Tools. It took him no longer than that to program an Arduino to do something that he had modeled as a finite state machine.

Showtime!

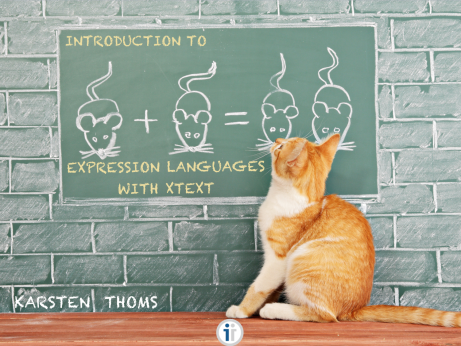

In “Introduction to Expression Languages with Xtext” I showed how to build languages in Xtext that embed expressions. There is Xbase, which can be easily reused and implements a fully-fledged base expression language. So why not just use Xbase? Sometimes there are reasons not to, mostly one of three: performance, the need for another type system (Xbase is bound to the Java type system), or the requirement to have no dependency on JDT.

But you can learn a lot from the Xbase grammar. At first glance this grammar seems complex, and it makes heavy use of more advanced Xtext grammar concepts like Syntactic Predicates and Assigned Actions. Many Xtext users are not aware of these concepts or don’t understand them. So I explained them and where to use them, and I showed some patterns that any expression language in Xtext follows. The patterns are not that visible at first sight, but I offered another view on them.

With a slight delay because of after-session discussions I joined Martin Lippert’s talk “Implementing Language Servers – the Good, the Bad, the Ugly”. Martin gave a great presentation about lessons learned from implementing and using multiple language servers, and about the state of the LSP. If you are interested in LSP and missed it, look for the recorded session. Later I gave Martin direct feedback on his good performance (he always delivers one).

It was right before the first session when Dominik Mohilo from jaxenter.de showed up. He has been continuously reporting interesting stuff in his online articles for years now, helping Eclipse to get more attention in developers’ minds in Germany. Thus I really value his work and was excited to finally meet him in person. Many things about Eclipse I read from him first, because his articles show up in my Facebook timeline and I often read them. He was even more excited to finally meet the drivers behind Eclipse in person. He has already written a good wrap-up of ECE 2017 for his Eclipse Weekly newsletter (German).

News on Photon, GEF and the Jigsaw

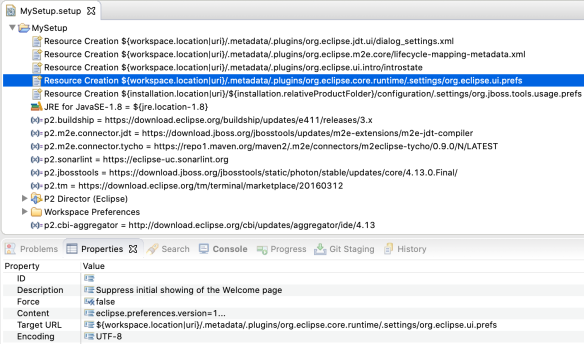

I invited Dominik to join our next ignite talks. Now it was my turn to present brand new stuff. I showed some of the things that were added from Photon milestone 1 to 3, and even some things that are just about to arrive through my contributions. Besides some nice usability features and the now fully working dark theme, I showed one of the most important things that users can look forward to: better performance.

From time to time I investigate where I think Eclipse could perform better and whether I have ideas for that. One addition that I am proud of is the “Expand All” action in the Search view’s tree. I showed both Oxygen and Photon with a search result of ~5000 elements, and the improvement is at least a factor of 2. In this demo you could also see another nice feature coming for macOS: the wait cursor used to be an ugly black-and-white circle. I found a hidden gem in macOS to get an animated system cursor. Actually it is so well hidden that you only hear rumors about this blue spinning ball on the net and find almost no pictures of it with a Google search. It is not documented, but I found a hint for it in a macOS source file. I think it looks much nicer now.

I could also show the improved speed of progress monitor updates by comparing Oxygen and Photon; it is just amazingly faster now. You will notice this, for example, when you import projects from the Git Repositories view. At the moment I am analyzing the performance of the (Java) text editor and have some patches pending. On my machine it already feels snappy again. I think the conference attendees around enjoyed my performance.

Next it was Alexander Nyßen’s turn to talk about “How we saved GEF from the Jigsaw”. Luckily for the GEF project, they were among the very early adopters of Java 9 and tested what implications Java 9 had for GEF. Of course they wanted to ensure that GEF would be compatible with Java 9 once it was out, and they found some issues. So they got in direct contact with the people at Oracle to request some changes that GEF and others would need. Users of GEF can be happy now: GEF works smoothly on Java 9!

My afternoon talk schedule

The next session I attended was Andreas Graf’s presentation “Large Scale Model Transformations with Xtend”. As written in my previous post, model transformation is one of the main use cases for Xtend, but this is known only to a few people. Andreas works in the automotive sector, where you usually have VERY large models, sometimes hundreds of megabytes in source. Transforming them is challenging, and they initially evaluated different technologies (e.g. QVT-O). They finally decided to use Xtend. Some of the reasons were Xtend’s performance and debuggability. Have you ever tried to debug an interpreter on a large model? It’s horrible. With Xtend you can just debug the code, even down to the Java code it is transpiled to. And they have not experienced serious bugs with Xtend.

I skipped one slot and went to our booth, where I joined some discussions with attendees and colleagues. Then it was time to listen to Olaf Gunkel’s talk “the Future is async – or Java Concurrency in the change of time”. He explained concurrent programming from Java 1.0 to Java 9 and the different paradigms. I actually learned quite a bit about the newest stuff, reactive streams. I have also not used CompletableFuture frequently, at least until now.
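
To give an idea of what the asynchronous style looks like in practice, here is a minimal sketch of my own (it is not taken from Olaf’s slides). The class `AsyncSketch` and the methods `loadPrice` and `totalPrice` are made-up names for illustration; only `CompletableFuture` and its methods `supplyAsync`, `thenCombine` and `join` come from the JDK (Java 8 and later).

```java
import java.util.concurrent.CompletableFuture;

public class AsyncSketch {

    // Hypothetical stand-in for a slow lookup (e.g. a remote call); purely illustrative.
    static int loadPrice(String item) {
        return item.length() * 10;
    }

    // Starts both lookups asynchronously and combines the results once both are done.
    static CompletableFuture<Integer> totalPrice(String a, String b) {
        CompletableFuture<Integer> first = CompletableFuture.supplyAsync(() -> loadPrice(a));
        CompletableFuture<Integer> second = CompletableFuture.supplyAsync(() -> loadPrice(b));
        return first.thenCombine(second, Integer::sum);
    }

    public static void main(String[] args) {
        // join() is the only blocking call, at the very end of the pipeline.
        System.out.println(totalPrice("beer", "pretzel").join());
    }
}
```

Compared to starting threads by hand and waiting for each of them, the two lookups here are just described as a pipeline, and the calling code only blocks where it really needs the result.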

Eclipse Stammtisch

That was the last talk for Tuesday and finally time to get a beer at the Stammtisch, which took place in the theater’s foyer where our booth was. We staged a small “flash mob”: all of us wore the same T-shirt, the one with the funny bunny we had on our roll-up at EclipseCon France. And suddenly you could see how large the itemis crowd was: around 20 people from itemis, 8 of whom were speakers with 12 talks.

It must have been a nightmare for the EclipseCon program committee to choose from so many talks. Of course they tend to provide a diverse program and not give single companies too much weight. But the fact that we were able to give 12 talks shows that they believed in the quality of our content and presentation. And no, we had no one from itemis on the program committee.

I cannot remember everyone I spoke to at the reception, but one was special: I met Brian de Alwis, who made it all the way from Canada to EclipseCon Europe. I got to know him at EclipseConverge & DevoxxUS. We had the same shitty, overpriced hotel and met at breakfast. It is always refreshing to speak with him; he is a very nice guy. Actually it was he who partially sparked my interest in contributing to the Eclipse Platform. The story behind that would be worth another post.

The real conference

The party was of course not over when the last beer was served at the reception. It had just begun. Of course it was again time to go over to Nestor, drink more beer and talk with all the Eclipse members who joined there. The real conference happens at the bar. This is where you really have time to talk and get to know new people. Year by year it gets bigger. At 1:30 AM I finally got to my room.

Oh gosh, and this was just Tuesday! Wednesday would become even more intense; read about that in my next post. Our slides are available for download now.
